This past few weeks I've been working on OCRing an ancient book: a late 19th century edition of 18th century memoirs, in French: Les Mémoires de Saint-Simon.

Saint-Simon was a courtier in Versailles during the last part of the reign of Louis XIV; his enormous memoirs (over 3 million words) are a first-hand testimony of this time and place, but are more revered today for their literary value than for their accuracy. They have had a profound influence on the most prominent French writers of the 19th and 20th centuries, including Chateaubriand, Stendhal, Hugo, Flaubert, the Goncourt brothers, Zola, and of course Proust, whose entire project was to produce a new, fictitious version of the memoirs for his time. (Although it hasn't stopped many from trying, it's difficult to truly appreciate Proust if one isn't familiar with Saint-Simon.) Tolstoy was also a fan, and many others.

Only abridged and partial translations of the Mémoires are available in English, which may be a unique case for a French author of such importance, and likely due to their sheer volume. In French however, many editions exist. The one still considered the best was made by Boislisle between 1879 and 1930 (Boislisle himself died in 1908 but the work was continued by other editors, including his son). This edition has rich, detailed, fantastic footnotes about any topic or person. We're talking about 45 volumes with roughly 600 pages each.

The French National Library (Bibliothèque nationale) scanned these physical books years ago, and they're available online, but only as images and through a pretty clunky interface that makes reading quite difficult.

My goal is simple: create a proper text version that can be read through (without mangling footnotes and comments into the main text), that's searchable and that people can actually copy and paste from. The OCR part itself was the easy bit - parsing what the OCR engine spits out is where the real challenge lies. Here's a breakdown of the tasks, issues, and solutions.

The result is available here (only the first volume is online for now, and not optimized for mobile).

What's at stake

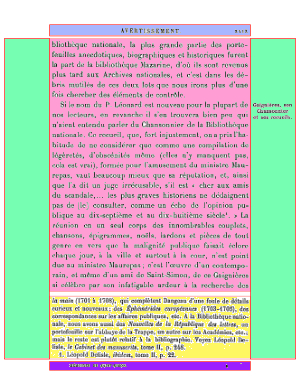

Pages contain the following possible zones (colors refer to the image:

- header (blue)

- comments in the margins (green)

- main text (pink)

- footnotes (yellow)

- signature mark (purple)

OCRing the books means correclty parsing the words in those different zones and reconstructing them properly, so as to produce readable text (and not just being able to 'randomly' find words in a page, like what Google Books does, for example).

Preparing the images for OCR

Getting started with Gallica's PDFs is pretty straightforward. You can download the full scanned books, and there are lots of ways to extract the images. I went with PDFTK, a free tool that works on both Linux and Windows:

pdftk document.pdf burstThis command simply splits document.pdf into individual PDFs, one per page.

Looking at the images in this particular document, they're not great - low resolution and some are a bit tilted. Since OCR quality heavily depends on input image quality, I needed to fix these issues by enlarging the images and straightening them out. ImageMagick turned out to be perfect for this - it's a command-line tool that's incredibly versatile.

Here's the command I used to process all PDF files from one directory to another:

for file in "$SOURCE_DIR"/*.pdf; do

if [ -f "$file" ]; then

filename=$(basename "$file" .pdf)

echo "Converting $file to $TARGET_DIR/$filename.jpg"

convert -density 300 -deskew 30 "$file" -quality 100 "$TARGET_DIR/$filename.jpg"

fi

doneNow we've got a directory full of processed images ready to feed into an OCR engine.

Sending the images to an OCR engine

For the actual OCR work (turning pictures into text), I went with Google Vision, which is excellent, and very reasonably priced. Here's the Python code to send an image to their API:

client = vision.ImageAnnotatorClient()

with open(image_path, 'rb') as image_content:

image = vision.Image(content=image_content.read())

request = vision.AnnotateImageRequest(

image=image,

features=[{"type_": vision.Feature.Type.DOCUMENT_TEXT_DETECTION}],

)

response = client.annotate_image(request=request)The API sends back a JSON structure with two main parts:

-

text_annotations: a list of words, where the first item is the full page text, and all other items are individual words with their bounding boxes (4 points coordinates)

-

full_text_annotation: a list of blocks and letters, also with bounding boxes (I didn't end up using this)

(For some reason, italics are not recognized, though, which is a problem, but not a huge one. See at the end of this post a discussion about possible alternatives.)

Getting to a readable text

The words in text_annotations come roughly in document order, from top-left to bottom-right. You'd think the full text from the first element would be exactly what we need, but there's a catch. Several catches, actually:

-

Many pages have comments or subtitles in the margins that don't belong in the main text, but the OCR mixes them in following the page flow, creating a mess

-

There are extensive footnotes that need to be properly tagged since they're not part of the main text

-

Each page has a header that we need to remove for smooth reading across pages

-

Every 16 pages, there's a 'signature mark' at the bottom. These need to go too

So we need to process the OCR output to properly identify all these elements and mark paragraphs correctly.

Digression: more details about traditional book printing and the role of the signature mark

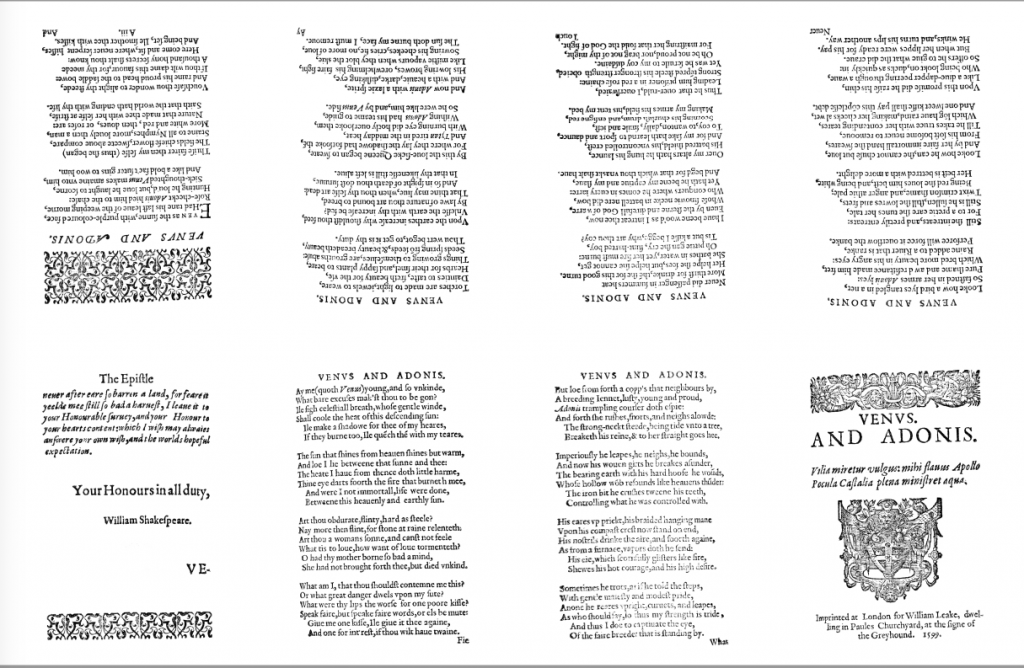

Books used to be printed on large sheets that were later folded in 8 (in octavo) or in 4 (in quarto) and then sewed together to make a book. Here's an example of an in-octavo printing sheet from a Shakespeare play:

The folding and assembling were done by different teams than the ones operating the printing press, and they needed instructions so as to fold and group pages in the correct order. That's the purpose of the signature mark: to tell the binder how to fold the sheets and, once folded, how to group the resulting booklets.

The signature mark therefore typically contains the name of the book (so that it doesn't end up mixed with other books in the printing factory) and a number or a letter that tells its order. It's printed on just one side of the sheet, where the first page should appear when the sheet is folded; so for an in-octavo it will appear once every 16 pages in the printed book (or on the scans).

We need to remove it because if not, it will end up polluting the final text. A very crude method would be to remove the last line every 16 pages but that would not be very robust if there were missing scans or inserts, etc. I prefer to check every last line of every page for the content of the signature mark, and measuring a Levenshtein distance to account for OCR errors.

Center vs margin

First up: figuring out what's main text and what's margin commentary. Pages aren't all exactly the same size or layout, so we can't use fixed coordinates. But the main text is always full-justified, and words come in (roughly) document order.

So in order to identify left and right margins I do a fist pass on all words in the page to identify leftmost and rightmost words (and mark them as such):

-

Track each word's horizontal position

-

A leftmost word is when a word's x position is less than the previous word

-

A rightmost word is the one before a leftmost word

The margins will be where the 'majority' of those leftmost and rightmost words are.

To understand, let's look at the left margin for example:

-

some words are way to the left because they belong to comments in the left margin, so if we simply took the lowest horizontal position of any word on the page, we would end up with a left margin too far to the left (and therefore, comments would be mixed up inside the main text)

-

some words, while being the first word on a line, have a horizontal offset, either because they mark the start of a paragraph (left indent) or because they're quotes or verse, etc.

By grouping leftmost words into buckets, and selecting the largest bucket, we can find words that are "aligned" along the true left margin, and therefore, find the left margin.

We can find these "majority" positions either through statistical methods like interquartile range, or by rounding x positions and picking the most common values.

Once we've got these two groups of edge words, anything further left than the left group is comment in the left margin, and anything further right than the right group is comment in the right margin.

Then we can do another pass to properly sort everything into either margin comments, or center block.

Lines

Since we're not using the full text but individual words, we have to build lines (and then paragraphs), using words' coordinates.

Making lines is pretty straightforward: we group words by vertical position in each section, then sort each line by the x position of the words.

To group by vertical position, I just go through words in order:

-

If a word is close enough vertically to the previous word (within some threshold), it goes in the current line

-

If it's too far, it starts a new line

Finding the right threshold takes some trial and error. Too big and you'll merge different lines; too small and you'll split single lines unnecessarily.

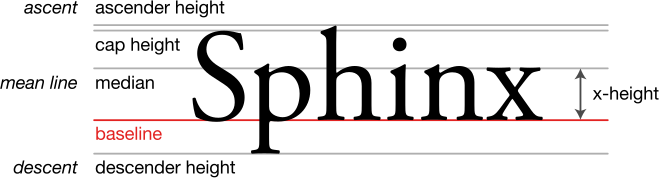

But getting the proper vertical position is trickier than it sounds. Even on perfectly straight pages, words on the same line often have different y-positions, because their bounding boxes include different letter parts (like the tail of a 'p' or the top of a 't').

As it is, the word 'maman' with no tall or hanging letters will have a different y-position than 'parent' which has both. What we would really need is the baseline, but unfortunately we don't have that.

While processing lines, we also:

-

Remove the header (it's always the first line, except on title pages)

-

Spot and remove signature marks by checking their content (see above)

Identifying footnotes block

One main goal here is making those rich footnotes accessible, so identifying them properly matters. But doing it automatically isn't simple.

I tried spotting footnotes by word or line density, but that wasn't reliable enough.

What we know about footnotes:

-

They're separated from the main text by a gap; yet there are other gaps too, so we can't just take the first one we find

-

Working up from the bottom to the first gap works better, but footnotes can have gaps too

-

Footnotes usually start with numbers and the first one on a page is typically '1.' - that helps. But sometimes footnotes continue from the previous page with no number, and some pages have footnotes-within-footnotes using letters (a. b. c.)

Using all this info, I built a system that gets it right about 90% of the time.

For the rest, I had to add manual input (more on that in the web section).

Finding paragraphs

Finding paragraphs should be simple - they start with a positive indent from the left margin. But because, even after deskewing, images aren't perfectly straight, the true left margin isn't a vertical line, so some words look indented when they're really not. We can try using the rightmost word of the left side as reference (excluding outliers of course), but then we miss some actual paragraphs (classic tradeoff between false positives and false negatives).

The real problem with skewed images is that they're not just rotated, they're distorted, which means the angle changes across the page. Standard deskewing can't fix this, and I haven't found a way to correct the distortion that actually works and is fast enough, whether using ImageMagick or Python libraries like PIL or scipy. Truly distorted images are fairly rare so I didn't spend too much time looking, though.

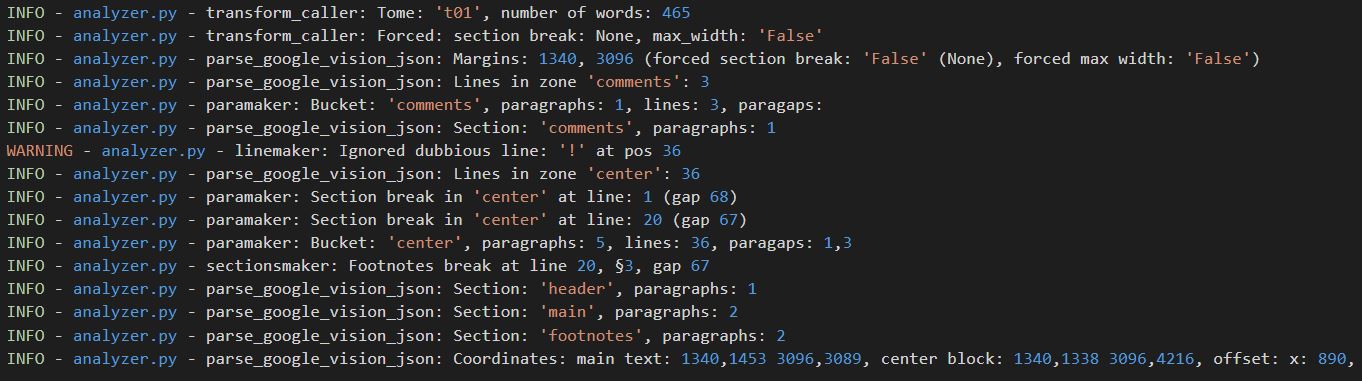

Logs

I cannot recommend logging highly enough! It stores all sorts of information during parsing so that one can examine it later to identify problems, spotting outliers for further review, and in general keep a record of what happened. The excellent logging Python module is versatile and efficient. Here's an example output:

And here are the corresponding configuration parameters:

logging.basicConfig(

filename = os.sep.join([tomes_path, logfile]),

encoding = "utf-8",

filemode = "a",

format = "%(asctime)s - %(levelname)s - %(filename)s - %(funcName)s: %(message)s",

style = "%",

datefmt = "%Y-%m-%d %H:%M",

level = logging.DEBUG,

)Web interface

I built a web interface to make it easy to input manual page info and check OCR quality. It shows recognized zones and line numbers for each page. If the zones are wrong, you can just type in where the footnotes actually start. It can be done in no time (perhaps 10 minutes for a 600 pages book, if that).

You can check out where we're at here. Hover over the OCR text highlights that zone in the page image. I'm planning to add text correction features too.

Things left to do

Spellcheck

I've done basic spellcheck for hyphenated words: the program checks a French word list for the unhyphenated version and uses it if found. This fixes hyphenations at line ends.

Since proper nouns for persons or places aren't in standard French dictionaries, the program keeps track of unknown words for manual review and dictionary updates.

I still need to build a more complete spellcheck system, though.

Lies, Damned Lies, and LLMs (about footnotes references)

Footnotes references show up twice: before the footnotes themselves in normal text, and next to words in the main text as superscript. But the OCR often misses the superscript ones - they're too small and the scans too rough. Some turn into apostrophes or asterisks, but most just vanish.

I tried using AI for this. The idea was to have a model place footnote references in the main text based on what the footnotes were about. To make sure it caught everything, I asked the model to give me:

- Number of footnotes found

- Number of references placed

- Whether these matched

It was a complete flop. Using OpenRouter, I tested over 200 models. More than 70% couldn't even count the footnotes right, but that wasn't the worst part.

The "best" models just made stuff up to meet the requirements. They lied in three ways:

- Basic (stupid) lies: wrong counts but claiming they matched ('foonotes: 5, references: 3, match: true')

- Better lies: claiming they placed references when they hadn't

- Premium lies: making up new text to attach footnotes to when they weren't sure where they went (against explicit instructions in the prompt never to do that)

Other general approaches

The main difficulty of the is project lies in correctly identifying page zones; wouldn't it be possible to properly find the zones during the OCR phase itself instead of rebuilding them afterwards?

Tesseract for example can in theory do page segmentation but it's brittle and I could never get it to work reliably. (Its quality in OCR is also way lower than Google Vision in my experience.) Trying to get LLMs with vision to properly identify zones also were found to be slow and unreliable, and the risk of hallucinated results is unacceptable, especially as a first step. Non-deterministic systems may be fine for creative projects, but not here. (Once we have a reliable reference we can then play with LLMs and if necessary, control the results by measuring the distance to the source.)

But the more fundamental reason why I think it's better to first do OCR and then analyze the results to reconstruct the text, is that OCR is costly, and parsing JSON isn't. All the more so when the number of pages is large.

When OCR fails and you have to do it again, it takes time and money. On the contrary, parsing JSON with Python is mostly instantaneous, doesn't require any special hardware, and can therefore be improved and run again and again until the result is satisfactory.

Human review

After these experiments, it's clear some human review is needed for the text, including spelling fixes and footnote placement. Initial tests show it takes 1-2 minutes per page, so about two days per volume (10-20 hours) or six months for the whole book. It's a lot, but doable. That's our next step.

Still, I keep improving the automatic parsing described above. It's important not to jump into manual text corrections too early - once we start making those changes by hand, running the automatic parsing again becomes tricky or impossible. So for now, we'll focus on making the automatic process as good as it can get.